Real-time Analytics and Power BI

Real-time analytics is a way of analysing data as soon as it is generated. Data is processed as it arrives, so the business gets insights without delay.

Real-time analytics is useful when you need analytics and reporting that you can act on quickly. It keeps the analysis up to date with the latest available data when that data changes constantly. It is particularly useful in what we at Oxi Analytics class as “sense and respond” analytical use cases.

These sense and respond use cases usually arise where small but quick changes in a process make a significant impact on the result, where risks need to be minimised, or where we must quickly identify changing patterns, such as sudden and unexpected shifts in customer behaviour, to avert serious damage to an area of the business. They are found across business verticals and industry sectors, particularly in brand monitoring, digital marketing and manufacturing.

Power BI’s real-time analytics features are used by organisations around the world, such as TransAlta, Piraeus Bank S.A. and Intelliscape.io. You can read more about these use cases here.

Power BI delivers real-time analytics through its real-time streaming features. Let’s explore these in depth, including, importantly, their limitations.

Real-time Streaming in Power BI

Real-time streaming allows you to stream data and update dashboards in real time. Any visual or dashboard that uses a real-time streaming dataset in Power BI can display and update real-time data.

Types of Real-time Datasets

There are three types of Power BI real-time streaming datasets designed for displaying real-time data:

- Push datasets

- Streaming datasets

- PubNub streaming datasets

Only the Push dataset allows historical data to be stored. If we want to build real-time analytical reports that show historic data as well as the latest changes, we need to use a Push dataset. The other two types, Streaming datasets and PubNub streaming datasets, are used when we want to create dashboard tiles that show only the latest data, or at most a short, transient window of it.

Here’s a table listing the major differences between all three datasets. You can find more information here.

| Capability | Push | Streaming | PubNub |
|---|---|---|---|
| Update dashboard tiles in real time | Yes, for visuals created via reports and then pinned to the dashboard | Yes, for custom streaming tiles added directly to the dashboard | Yes, for custom streaming tiles added directly to the dashboard |
| Data stored permanently in Power BI for historic analysis | Yes | No, it is only stored temporarily for an hour | No |
| Ability to build reports atop the data | Yes | No | No |

Push Dataset

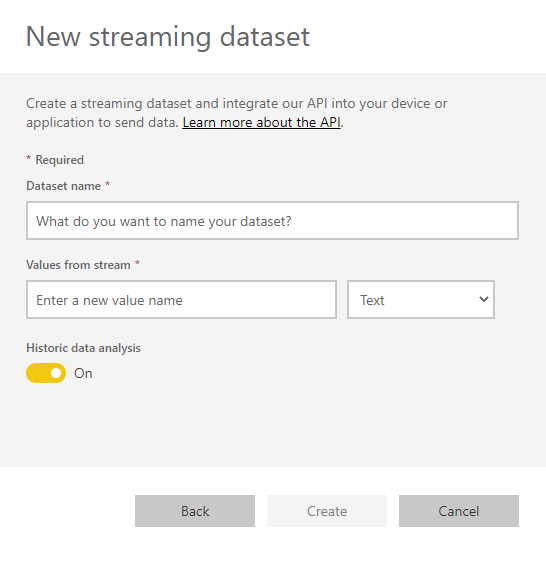

A Push dataset is a special case of a streaming dataset: if the ‘Historic data analysis’ option is enabled while creating a streaming dataset in Power BI, the result is a Push dataset. Once the dataset is created, the Power BI service automatically creates a new database to store the data.
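The UI toggle is the usual route, but a Push dataset can also be created through the Power BI REST API by posting a dataset definition to `https://api.powerbi.com/v1.0/myorg/datasets` with `defaultMode` set to `"Push"`. The sketch below only builds the request with the Python standard library and does not send it. The dataset, table and column names are hypothetical, and obtaining an Azure AD access token is assumed and not shown.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_create_push_dataset_request(token: str, dataset_name: str,
                                      table_name: str,
                                      columns: dict) -> urllib.request.Request:
    """Build (but do not send) a POST that creates a push dataset.

    `columns` maps column names to Power BI data types, e.g. "Int64",
    "Double" or "String". All names passed in here are hypothetical.
    """
    body = {
        "name": dataset_name,
        "defaultMode": "Push",  # push mode keeps rows for historic analysis
        "tables": [{
            "name": table_name,
            "columns": [{"name": n, "dataType": t} for n, t in columns.items()],
        }],
    }
    return urllib.request.Request(
        f"{API_ROOT}/datasets",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}",  # token assumed
                 "Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical example; urllib.request.urlopen(req) would send it.
req = build_create_push_dataset_request(
    "<access-token>", "SalesRealTime", "Sales",
    {"Store": "String", "Amount": "Double", "Units": "Int64"})
```

Keeping request construction separate from sending makes it easy to inspect the payload before anything reaches the service.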

Reports can be built on these datasets like any other dataset. Power BI doesn’t allow any transformations on this dataset, and it cannot be combined with other data sources either. However, measures can be added to the existing table. Data can also be deleted using a REST API call.
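The REST API calls involved in feeding and clearing a Push dataset table are a `POST` (add rows) and a `DELETE` (remove rows) on `.../datasets/{datasetId}/tables/{tableName}/rows`. Note that the delete call removes all rows in the table, not a selection. This is a minimal sketch that only builds the requests; the dataset ID, table name and access token are hypothetical placeholders.

```python
import json
import urllib.parse
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def _rows_url(dataset_id: str, table_name: str) -> str:
    # URL-encode the table name in case it contains spaces.
    return (f"{API_ROOT}/datasets/{dataset_id}/tables/"
            f"{urllib.parse.quote(table_name, safe='')}/rows")


def build_add_rows_request(token: str, dataset_id: str, table_name: str,
                           rows: list) -> urllib.request.Request:
    """POST that appends `rows` (a list of dicts) to a push dataset table."""
    return urllib.request.Request(
        _rows_url(dataset_id, table_name),
        data=json.dumps({"rows": rows}).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def build_delete_rows_request(token: str, dataset_id: str,
                              table_name: str) -> urllib.request.Request:
    """DELETE that removes all rows from a push dataset table."""
    return urllib.request.Request(
        _rows_url(dataset_id, table_name),
        headers={"Authorization": f"Bearer {token}"},
        method="DELETE",
    )
```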

Streaming Dataset

Data is pushed to a Streaming dataset as well, but with an important difference: Power BI stores the data only in a temporary cache, which quickly expires. The cache can only be used to display visuals with a transient sense of history, such as a line chart with a time window of one hour.
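Pushing data to a Streaming dataset created with the ‘API’ option usually means sending an HTTP `POST` to the dataset’s push URL, with a JSON array of row objects as the body. The push URL embeds its own key, so no bearer token is needed. The URL below is a hypothetical placeholder and its exact shape is an assumption: always copy the real one from the dataset’s API info in the Power BI service. The field names are also hypothetical.

```python
import json
import urllib.request
from datetime import datetime, timezone

# Hypothetical placeholder: copy the real push URL, which embeds a secret key,
# from the streaming dataset's API info in the Power BI service.
PUSH_URL = ("https://api.powerbi.com/beta/<tenant-id>/datasets/"
            "<dataset-id>/rows?key=<key>")


def build_stream_request(push_url: str, rows: list) -> urllib.request.Request:
    """Build (but do not send) a POST carrying a JSON array of rows."""
    return urllib.request.Request(
        push_url,
        data=json.dumps(rows).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# One hypothetical sensor reading; keys must match the dataset's field names.
reading = {
    "timestamp": datetime.now(timezone.utc).isoformat(),
    "temperature": 21.4,
}
request = build_stream_request(PUSH_URL, [reading])
# urllib.request.urlopen(request) would send it once PUSH_URL is real.
```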

Since no underlying database is created, you cannot build reports using this data, nor use report functionality such as filtering or custom visuals.

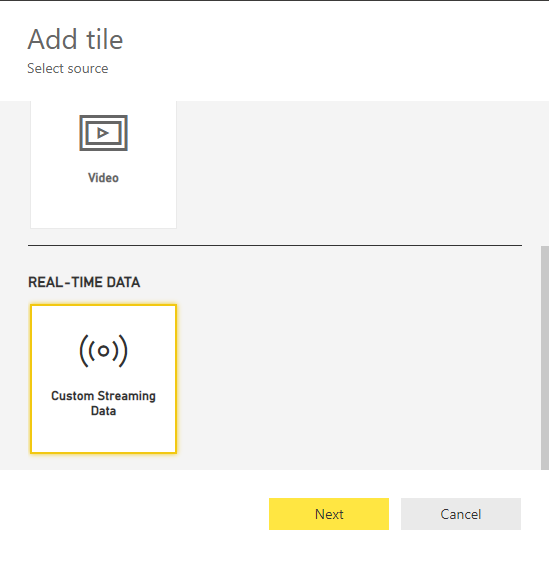

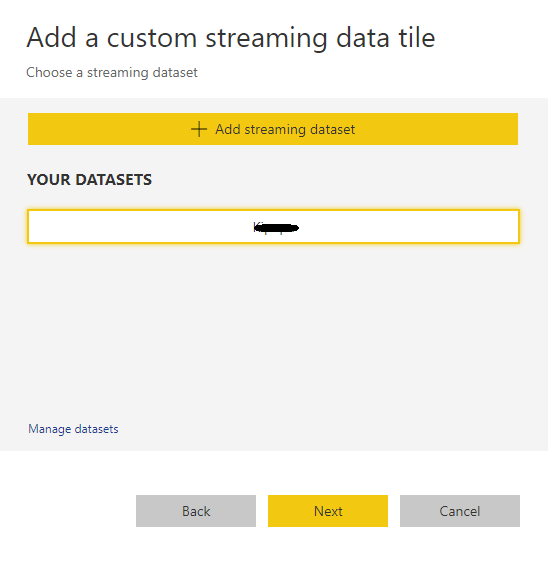

The only way to visualise this data is by creating a dashboard and adding a tile with “Custom Streaming Data” under the Real-Time Data section.

PubNub Dataset

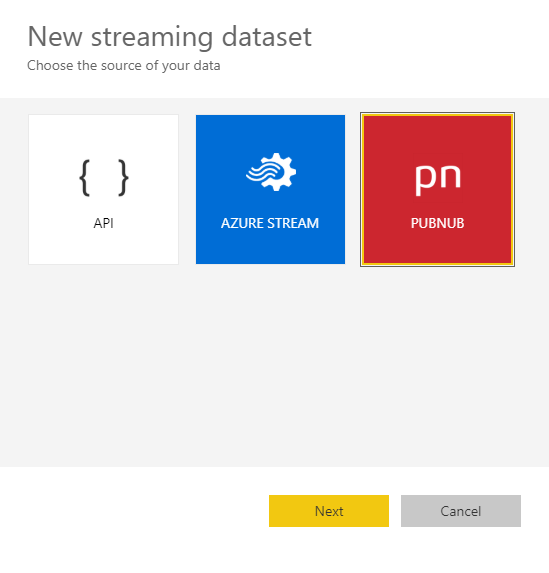

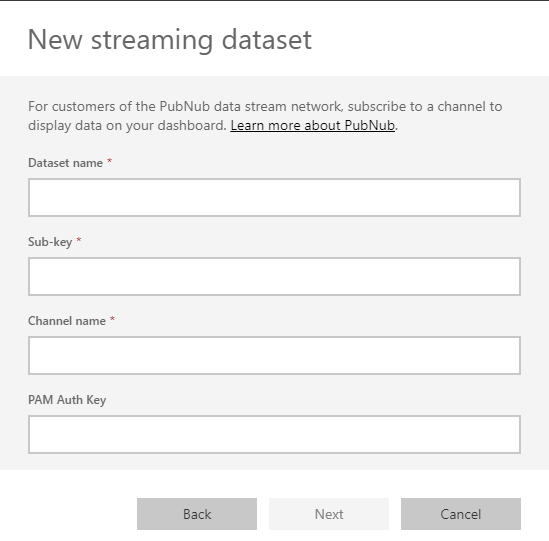

PubNub is a third-party data streaming service. With a PubNub dataset, the Power BI web client uses the PubNub SDK to read an existing PubNub data stream, and no data is stored by the Power BI service.

As with a streaming dataset, there is no underlying database in Power BI, so you cannot build report visuals against the incoming data or use other report functionality. The data can only be visualised by adding a tile to the dashboard and configuring a PubNub data stream as its source.

Any web or mobile application that uses the PubNub platform for real-time data streaming can serve as a source here.

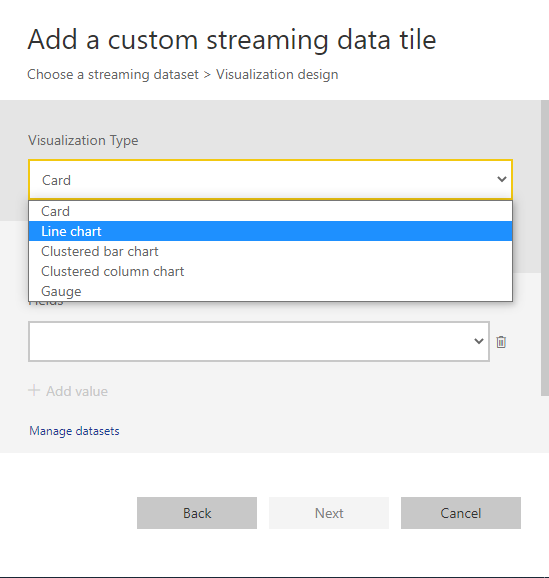

In general, when using a custom streaming dashboard tile, you can choose from five visualisation types: card, line chart, clustered bar chart, clustered column chart and gauge, as shown in the screenshot. These tiles, when added to the dashboard, display a lightning bolt icon in the top left corner, indicating that they are real-time data tiles.

How to Choose a Dataset Type?

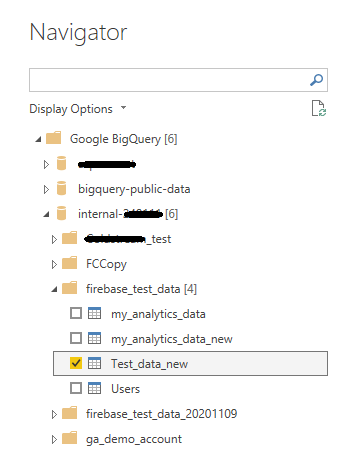

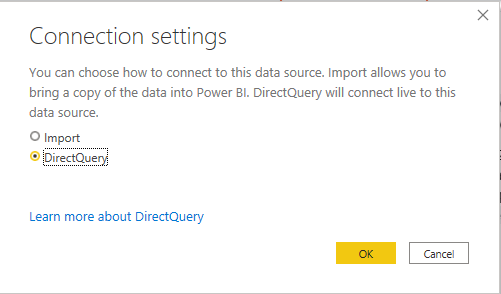

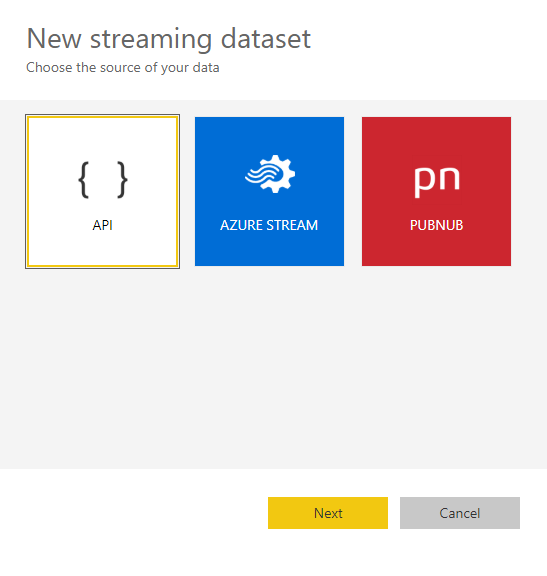

Use a Push dataset when historic data analysis and building reports on top of the data are crucial. It can be created with the ‘API’ option in the streaming dataset UI, and you can connect to it from either the Power BI service or Power BI Desktop.

A Push dataset can also be created using the ‘Azure Stream’ (Azure Stream Analytics) option. However, a dataset created this way can store at most 200,000 rows. Once the limit is reached, rows are dropped in FIFO (first-in, first-out) order, so the oldest rows are removed first.
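To make the FIFO retention rule concrete, here is a toy Python model of a row store capped at 200,000 rows. It only simulates the behaviour described above; it is not how Power BI implements it, and the class name is hypothetical.

```python
from collections import deque

# Row cap cited above for push datasets created via the 'Azure Stream' option.
MAX_ROWS = 200_000


class FifoRowStore:
    """Toy model of FIFO retention (a simulation, not Power BI code)."""

    def __init__(self, max_rows: int = MAX_ROWS):
        # A deque with maxlen silently discards items from the left (oldest)
        # end when a new item is appended to a full deque.
        self._rows = deque(maxlen=max_rows)

    def append(self, row) -> None:
        self._rows.append(row)

    def __len__(self) -> int:
        return len(self._rows)

    def oldest(self):
        return self._rows[0]


store = FifoRowStore()
for i in range(200_005):
    store.append({"id": i})
print(len(store), store.oldest())  # 200000 {'id': 5}
```

After 200,005 inserts, the five oldest rows (ids 0 to 4) have been dropped, so historic analysis on such a dataset only ever covers the most recent 200,000 rows.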

If you only need dashboard tiles that display pre-aggregated live data with simple visuals, a streaming dataset is the best choice. It can only be connected to from the Power BI service.

Use a PubNub dataset when the data already flows through a PubNub data stream. Tiles created from this dataset are optimised for displaying real-time data with very little latency.
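The guidance above can be summarised as a small decision function. This is our own simplification for illustration: the function and enum names are hypothetical, and real projects may weigh other factors such as latency or row limits. History and reporting needs are checked first because only a Push dataset supports them, whatever the data source.

```python
from enum import Enum


class DatasetType(Enum):
    PUSH = "Push"
    STREAMING = "Streaming"
    PUBNUB = "PubNub"


def choose_dataset_type(source_is_pubnub: bool,
                        needs_history: bool,
                        needs_reports: bool) -> DatasetType:
    """Rule-of-thumb choice of Power BI real-time dataset type."""
    if needs_history or needs_reports:
        # Only a push dataset stores data permanently and supports reports.
        return DatasetType.PUSH
    if source_is_pubnub:
        # Data already flows through PubNub: read the stream directly.
        return DatasetType.PUBNUB
    # Latest values on simple dashboard tiles: a streaming dataset suffices.
    return DatasetType.STREAMING


print(choose_dataset_type(False, False, False).value)  # Streaming
```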

I hope this helps answer some of your questions on real-time analytics using Power BI. Please feel free to contact me at contact@oxianalytics.xyz if you have any further questions I can help with.