The next agricultural revolution is upon us, and farms with big data initiatives are set to see big benefits.

With the world population set to reach 8.5 billion by the year 2030, farming businesses are facing enormous pressures to innovate—and fast. The biggest farms are already using technology to manage their activities, especially in the U.S. where automation is being used to produce more food than ever before.

Now it’s time for smaller farms to embrace the digital transformation. Big data carries large economic potential, and we think it can secure the fortunes of a new generation of digitally savvy farming professionals, as long as you know what it can do and how you can use it.

Building a profitable farm business

Like any business, your farm’s profitability depends on two key drivers: using your inputs to maximise your outputs, and using assets efficiently. For arable farms, inputs are your seeds, fertiliser, agrochemicals, fuel, electrical energy and variable labour. Among your many assets, you have machinery, buildings and of course, the land itself.

On the other side of the equation, you have your outputs. Crops are the obvious output, but we must also talk about the environmental benefits of your farming business as sustainability has a market value in a modern economy.

Productivity is the art of getting the most output from your inputs, and that requires careful tracking. Managing your farm without monitoring what you do is like driving blindfolded. Where once you might have relied on closeness to the land and an understanding of it to assess yields and predict productivity, now we have data.

And it’s set to take agriculture to new heights.

New levels of precision, field by field

Fields differ greatly in their productive potential. Soil type, soil conditions, crop choices, preparatory treatments, seed varieties, fertiliser choices, crop protection and application rates and timings all have a major impact. To boost productivity, farmers can benefit from a wealth of rich data from universities and science labs, specifying the benefits of innovative products and new techniques.

Seed, fertiliser and crop-protection manufacturers also put out a ton of guidance on the optimum use of their products—though some of their claims are not independently verified.

Now, thanks to the rollout of 4G and 5G, it’s possible to optimise production even further, right down to the level of the individual field. Sensors placed strategically around fields let farmers ‘see’ their crops across large areas from anywhere, without ever venturing into the field.

Field sensors can show where different parts of a field need different amounts of fertiliser. Water monitors can give you up-to-the-minute data on how much moisture crops are receiving. These sensors send information in real time, providing detailed insight into crop health.
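As a rough illustration of how sensor readings might translate into a variable application rate, the sketch below applies just enough fertiliser to close the gap between each zone's soil nitrogen reading and a target, up to a cap. The zone names, readings, target and cap are made-up values for illustration, not agronomic recommendations.

```python
# Hypothetical example: all numbers are illustrative, not agronomic advice.

def application_rate(soil_n, target_n, max_rate):
    """Return a fertiliser rate (kg/ha) equal to the nitrogen shortfall,
    capped at max_rate.

    soil_n   -- measured soil nitrogen for the zone (kg/ha)
    target_n -- nitrogen level we want the zone to reach (kg/ha)
    max_rate -- upper limit on what we are willing to apply (kg/ha)
    """
    shortfall = max(target_n - soil_n, 0)   # never apply a negative amount
    return min(shortfall, max_rate)         # cap at the maximum rate


# Assumed sensor readings for three zones of one field (kg N/ha).
zone_readings = {"north": 40, "centre": 95, "south": 130}

rates = {
    zone: application_rate(soil_n, target_n=120, max_rate=60)
    for zone, soil_n in zone_readings.items()
}

for zone, rate in rates.items():
    print(f"{zone}: apply {rate} kg/ha")
```

In this invented field, the depleted northern zone receives the capped rate while the southern zone, already above target, receives nothing.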

For crop spreading, spraying and monitoring, we’re seeing increasing use of drones. These aerial devices save a great deal of time and labour: imagine the return on investment if farmers could seed, spray and capture aerial images of the soil from an unmanned aircraft, without having to charter a plane.

Closer to earth, sensors placed on tractors and farm equipment can provide a wealth of data, from crop inspections to when your machine needs servicing. Farm machines are also an early candidate for autonomous driving, further improving farm efficiency.

Using the past to predict the future

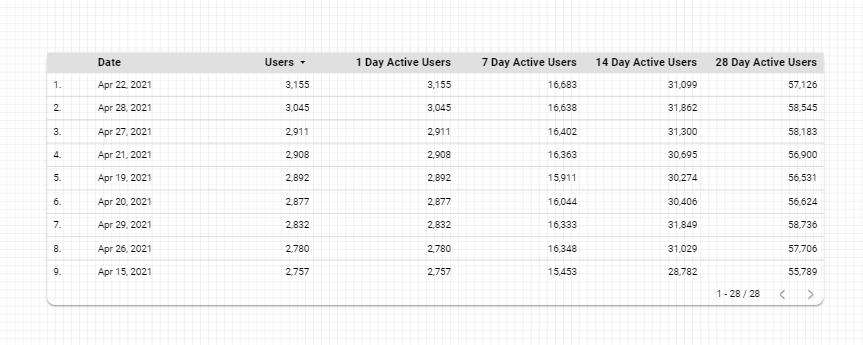

The ability to remotely monitor crops is one thing; being able to predict outcomes is something else. With predictive analytics, a type of statistical modelling, you can combine the real-time data collected from fields with data from the past to understand what is happening now and predict what is going to happen. This makes it easier to make decisions that impact the bottom line.

For instance, unexpected weather variations can impact crop yields, and certain crops under certain conditions are susceptible to pests. Feeding variables such as weather, soil type, temperature and moisture levels into a model can help you apply water, fertiliser, pesticides and so on in more precise quantities, specific to crops at the field level, to increase productivity.
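To make the idea concrete, here is a minimal sketch of predictive modelling: fit a straight line to past seasons and use it to estimate this season's yield from a current reading. It uses a single variable (seasonal rainfall) and invented figures; a real model would combine many variables and far more history.

```python
# Hypothetical history: (seasonal rainfall in mm, yield in t/ha). Invented data.
history = [(300, 6.0), (350, 7.0), (400, 8.0), (450, 9.0)]


def fit_line(points):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


intercept, slope = fit_line(history)

# Rainfall measured so far this season (assumed sensor reading).
rainfall_now = 380
predicted_yield = intercept + slope * rainfall_now
print(f"Predicted yield: {predicted_yield:.1f} t/ha")
```

The same pattern, past observations in and a forward estimate out, underlies more sophisticated models that take weather, soil and pest data together.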

Together, these technologies can help you make timely interventions to reduce losses and even enhance yields economically—resulting in higher production with less waste, which is exactly what drives profitability.

Protecting revenue and controlling costs

Both crop revenues and input costs are susceptible to the pricing gyrations that are inevitable in commodity markets—sometimes exacerbated by government interventions. Some, but not all, of these variations can be hedged. Still, most farmers, as they go through the year, will have an up-to-date prediction of the revenues and profitability they expect to realise at the end of their financial year.

Data is another weapon in the arsenal, improving the accuracy of your predictions.

Overall, optimisation has led to higher yields and higher gross profits, though the percentages vary by farm type and size. Cropping enterprises manage 61% gross profit on average. Input costs for seeds, fertilisers and crop protection amount to 90% to 95% of variable costs. As we have seen, different fields have different yield potentials. The law of diminishing returns applies, too, so at some point, adding more fertiliser is not going to raise your output.

In other words, there’s an optimum productivity target that can be set for each field and each crop, as well as an overall optimal efficiency level for every farm. Optimising the ratio between yield and inputs at the field level is a major driver of improved gross profits.
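The diminishing-returns argument can be sketched numerically. Assuming, purely for illustration, that yield responds to fertiliser along a quadratic curve, the most profitable rate is the point where the value of the extra crop from one more kilogram equals the cost of that kilogram. The response coefficients and prices below are hypothetical.

```python
# Hypothetical quadratic response: yield (t/ha) = A + B*n - C*n**2,
# where n is fertiliser applied (kg/ha). Coefficients are invented.
A, B, C = 4.0, 0.05, 0.0001

CROP_PRICE = 200.0      # assumed crop price, currency units per tonne
FERTILISER_COST = 1.0   # assumed fertiliser cost, currency units per kg


def crop_yield(n):
    """Yield in t/ha for n kg/ha of fertiliser under the assumed curve."""
    return A + B * n - C * n ** 2


def margin(n):
    """Revenue minus fertiliser cost, per hectare."""
    return CROP_PRICE * crop_yield(n) - FERTILISER_COST * n


# Setting d(margin)/dn = 0 gives CROP_PRICE * (B - 2*C*n) = FERTILISER_COST.
optimal_rate = (B - FERTILISER_COST / CROP_PRICE) / (2 * C)
# The rate that maximises yield alone ignores the cost of the input.
max_yield_rate = B / (2 * C)

print(f"Most profitable rate: {optimal_rate:.0f} kg/ha")
print(f"Maximum-yield rate:   {max_yield_rate:.0f} kg/ha")
```

In this made-up case the most profitable rate sits below the yield-maximising rate: the last few kilograms still add yield, but not enough to pay for themselves.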

Securing the future of the farm … and the planet

Small, family-run farms make up the bulk of the farming sector globally, and on these farms, farming remains a low-tech, low-yield enterprise. But falling costs mean that data and analytics tools will soon be accessible to the many. On a global scale, data, analytics and automation have the potential to transform agricultural productivity and sustainability, fighting hunger and slowing climate change.

That is a compelling economic reason to roll out digital infrastructure globally.

Closer to home, data can help you understand and record the direct costs and opportunity costs of the actions you are taking to protect the environment, protect wildlife and mitigate climate change. This supports a claim for the government payments that are made to reward farmers for their stewardship of the countryside.

Some of the government payments to help farmers to be productive and sustainable should arguably be directed to establishing the infrastructure needed to support the new tech. Improving the profitability of farms will attract more investment into the sector and encourage a positive cycle of investment, increasing productivity and sustainability.

It all helps you increase the quality and predictability of earnings (EBITDA), and therefore the value of the farm. This in turn attracts investors to the farming sector and secures backing for farm managers with proven effectiveness.

Small farm, meet big data

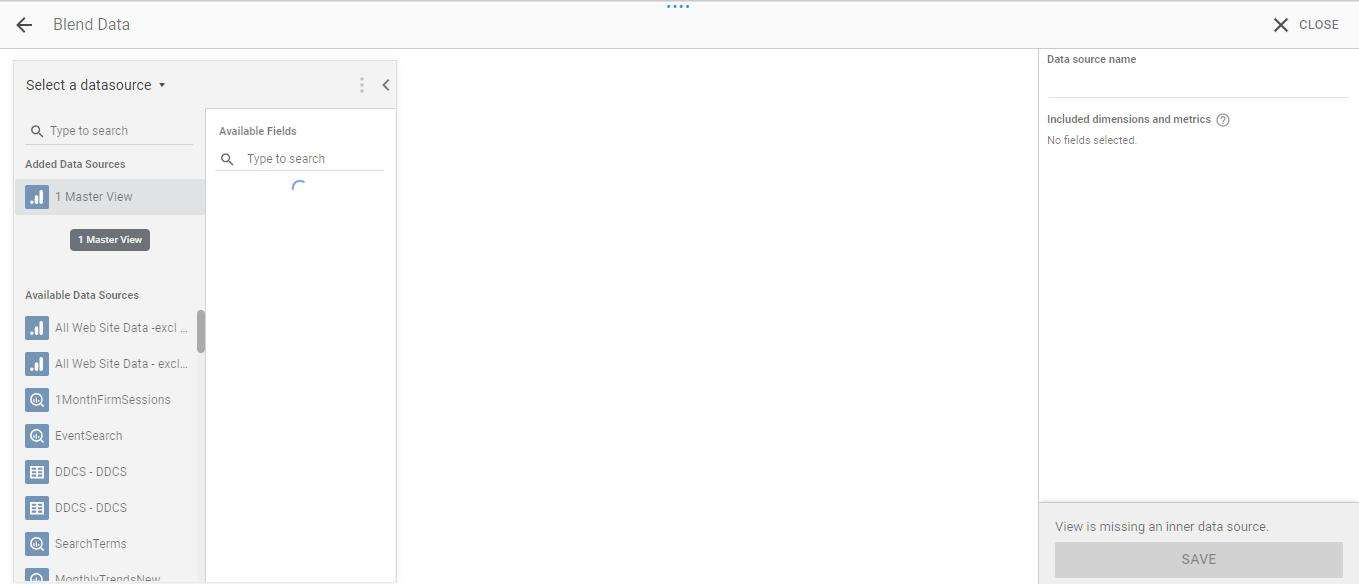

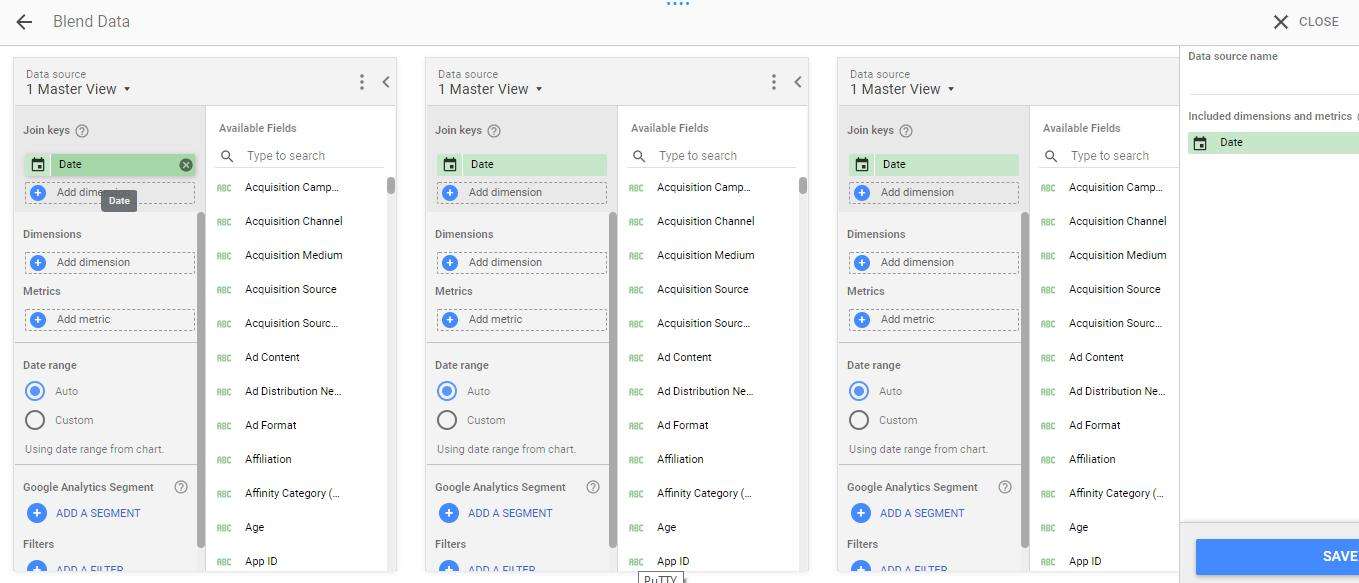

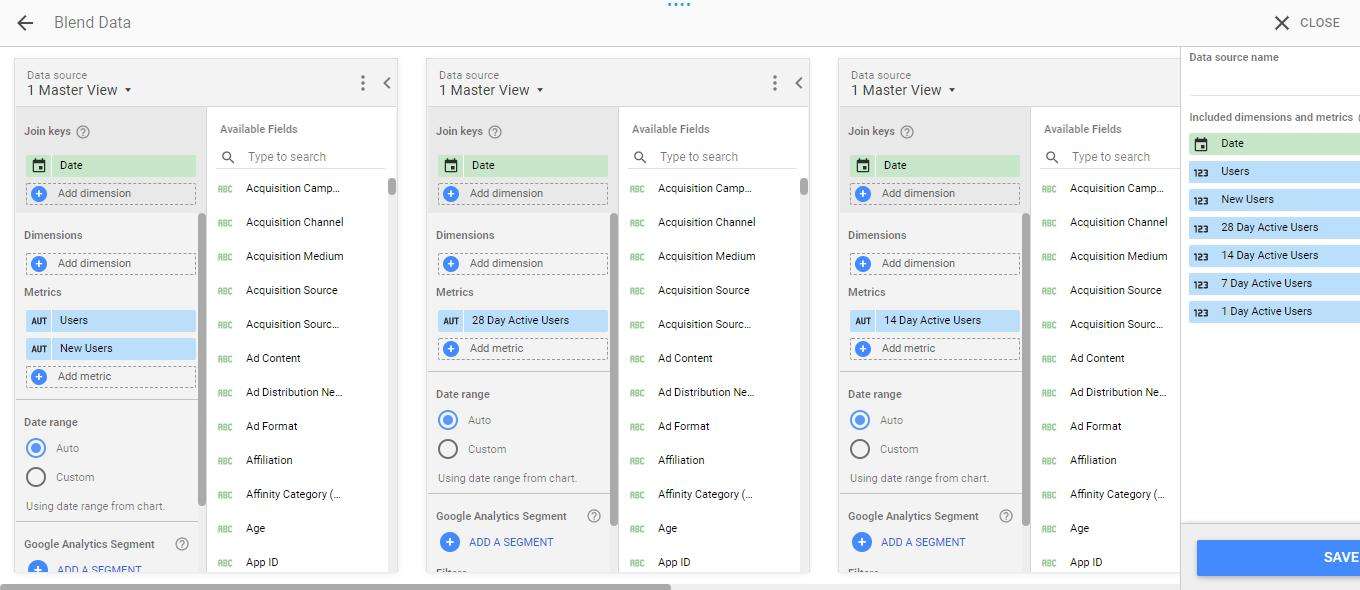

For big data to work, farms need a data warehouse to centralise and consolidate large amounts of data from multiple sources. This technology pulls all the various data sources together, so you have all your cropping plans, field conditions data, farming activities, interventions, financial records, energy usage and so on all in one place. The data warehouse is the farm’s ‘single source of truth.’
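As a toy illustration of the ‘single source of truth’ idea, the sketch below loads records from two separate sources into one in-memory SQLite database and answers a question neither source could answer alone. The table names, columns and figures are invented; a production warehouse would use a dedicated platform and automated data loading.

```python
import sqlite3

# In-memory database standing in for the warehouse (illustrative only).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE field_activity (field TEXT, fertiliser_kg REAL);
    CREATE TABLE harvest (field TEXT, yield_t REAL);
""")

# Source 1: activity log from farm machinery (invented records).
conn.executemany(
    "INSERT INTO field_activity VALUES (?, ?)",
    [("Top Field", 1200.0), ("Top Field", 800.0), ("Mill Field", 1500.0)],
)
# Source 2: harvest records (invented records).
conn.executemany(
    "INSERT INTO harvest VALUES (?, ?)",
    [("Top Field", 100.0), ("Mill Field", 60.0)],
)

# Combine both sources: tonnes harvested per tonne of fertiliser applied.
rows = conn.execute("""
    SELECT h.field, h.yield_t / (SUM(a.fertiliser_kg) / 1000.0) AS t_per_t
    FROM harvest AS h
    JOIN field_activity AS a ON a.field = h.field
    GROUP BY h.field, h.yield_t
    ORDER BY h.field
""").fetchall()

for field, ratio in rows:
    print(f"{field}: {ratio:.0f} t of crop per t of fertiliser")
conn.close()
```

Once activity logs and harvest records sit side by side, a per-field comparison like this becomes a single query rather than a manual reconciliation of spreadsheets.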

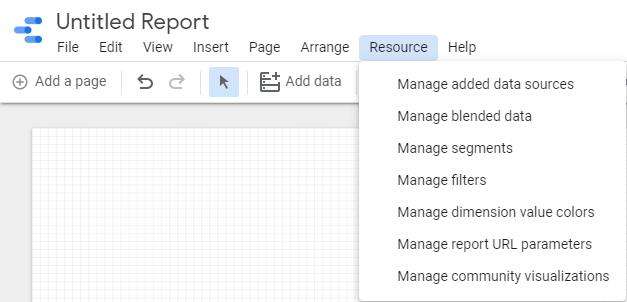

Then, you need a data visualisation tool that translates the data into actionable insights for farm managers and staff, so that everyone can make the right decisions based on what the data is telling them. Visualisation brings to life a vision of farming in which all your inputs are monitored and managed in real time.

The bottom line here is that the future of agriculture depends on its digital transformation. Farmers who embrace the new technologies will benefit from better yielding crops, more predictable outputs, and the ability to manage their activities in more efficient ways. Farmers must aggressively adopt data and analytics—or be outperformed.

Want to continue the conversation? Speak to an expert in agricultural analytics, and find out how we can help your business farm smarter today.