How to Approach AI Opportunities in Small and Medium-Sized Firms

Most firms are exploring how to apply AI to their businesses. The widespread belief that AI will be transformational lies behind the recommendation that firms invest significant time and money in building the necessary capabilities. Though valid, this advice has large firms in mind: those with substantial resources and the ability to spread risk. Small and mid-sized firms need approaches that are economical, lower risk and that play to their strengths.

AI as a Source of Opportunities

Artificial intelligence is a source of opportunities for businesses, small and large. The necessary data can be assembled from a myriad of sources. Small businesses will need to develop the capabilities to tap into AI effectively and to keep up with change and competition, which means bringing their people up to speed. So, how can they best do that?

AI Courses

Online learning platforms, universities and executive-level programmes all offer AI training. Courses differ, but most are delivered by academics and include theoretical material that is hard to relate to a specific business situation.

AI Skills

Firms can hire new people who already have these skills. However, there are reports of culture clashes in large firms between academic newcomers and pragmatic, experienced incumbents. This suggests that hiring is an even higher-risk option for smaller firms, which rely more heavily on close working relationships and tacit understanding.

AI and Change

One option proposed for helping firms “transform” is an internal “AI Competence Centre” or “Academy” run as part of a change programme. Whatever the benefits, this level of commitment is unlikely to be cost-effective for a smaller business.

AI for Smaller Businesses

These firms need an approach that plays to their strengths: practical, focussed on company priorities, engaging, hands-on, intuitive and stripped of academic jargon. It is entirely possible to convey AI principles in terms that are relevant to practical managers. After all, AI is merely replicating what practical managers already do, but in a way that requires reams of data and hours of computer time to perform millions of repetitive calculations. It is feasible only because computer processing power is cheap.
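To make the point about repetitive calculations concrete, here is a minimal sketch in Python. It is an illustration only, not a recommended method: the figures are invented toy data, and the function name `fit_line` and its parameters are hypothetical. The program estimates how sales respond to advertising spend by repeating one small correction many thousands of times, which is the kind of simple, repetitive work the paragraph above describes.

```python
# Toy data, invented purely for illustration: monthly advertising spend
# and the sales that followed (both in thousands).
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [12.0, 14.0, 16.0, 18.0, 20.0]


def fit_line(xs, ys, steps=20000, learning_rate=0.01):
    """Fit sales = intercept + slope * spend by repeated small corrections.

    Returns the fitted intercept and slope, plus a count of how many
    individual error calculations were performed along the way.
    """
    intercept, slope = 0.0, 0.0
    n = len(xs)
    calculations = 0
    for _ in range(steps):
        grad_intercept = 0.0
        grad_slope = 0.0
        for x, y in zip(xs, ys):
            # How far off is the current guess for this data point?
            error = (intercept + slope * x) - y
            grad_intercept += error
            grad_slope += error * x
            calculations += 1
        # Nudge both numbers slightly in the direction that reduces the error.
        intercept -= learning_rate * grad_intercept / n
        slope -= learning_rate * grad_slope / n
    return intercept, slope, calculations


intercept, slope, calculations = fit_line(ad_spend, sales)
print(f"Estimated sales = {intercept:.2f} + {slope:.2f} x spend")
print(f"Individual calculations performed: {calculations}")
```

An experienced manager could read the same relationship off a chart in seconds. The program reaches it through brute repetition, which is practical only because each calculation costs almost nothing.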

Autonomous cars are some way off; autonomous companies are too far off to worry about. Experience and judgement, however, are indispensable, and small firms have both in spades.

How to Introduce AI

Our recommendation is to introduce AI as just another management tool, applied to a series of selected value-generating purposes. Keep the managers in control and contributing to all aspects of the work, from sourcing and exploring data to interpreting the results. They can then use the results from AI to inform their experienced judgements. Engaging businesspeople and using AI in its assistive role is a pragmatic way to use AI in business.

It is entirely healthy for managers to be sceptical and to reject some AI results. The literature is full of embarrassing examples of AI techniques producing results that cannot be replicated. Firms that use AI to generate benchmark scores for investors or advertisers can produce different scores for the same benchmark, leading to commercial disputes. Data issues often introduce a degree of uncertainty into the results, which is one reason why experienced people need to be involved. Making decisions under uncertainty is what they have been doing for years.
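The uncertainty that data introduces can be shown with a short Python sketch. It is an illustration under stated assumptions, not a prescribed technique: the ratings are invented, the function name `bootstrap_range` is hypothetical, and resampling the data (a standard statistical approach known as bootstrapping) is our choice of example rather than something any particular AI product does. The sketch recomputes an average score many times from reshuffled versions of the same small data set and reports the range within which most of those averages fall.

```python
import random
import statistics

# Invented customer ratings (1 to 5), used purely for illustration.
ratings = [3, 4, 4, 5, 2, 4, 3, 5, 4, 1, 4, 3]


def bootstrap_range(values, resamples=1000, seed=0):
    """Return the range covering the middle 95% of resampled averages.

    Each resample draws len(values) items from values with replacement,
    mimicking the way a slightly different data set could have been collected.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    estimates = []
    for _ in range(resamples):
        sample = [rng.choice(values) for _ in values]
        estimates.append(statistics.mean(sample))
    estimates.sort()
    low = estimates[int(0.025 * resamples)]
    high = estimates[int(0.975 * resamples) - 1]
    return low, high


average = statistics.mean(ratings)
low, high = bootstrap_range(ratings)
print(f"Average rating: {average:.2f}")
print(f"Plausible range given this data: {low:.2f} to {high:.2f}")
```

A single headline score hides this spread. Seeing the range makes it easier for an experienced manager to judge how much weight a result deserves, and why two firms working from slightly different data can report different scores for the same benchmark.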

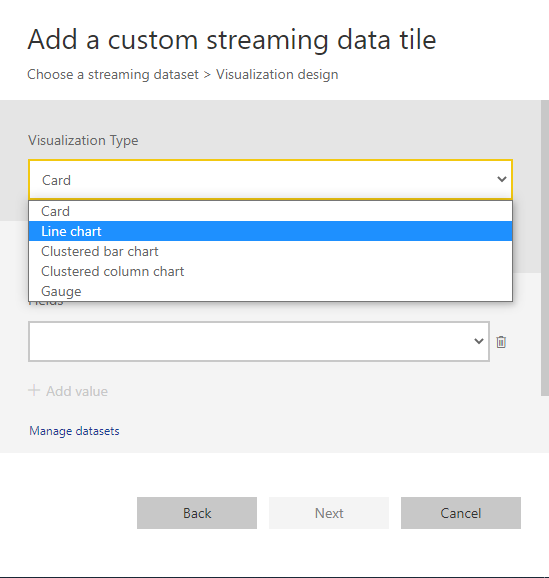

Presenting AI Results Visually

It is important to present AI results in a way that everyone can understand. This is the role of data visualisations, which are intuitive. After viewing a series of visualisations of a data set, most people can readily draw meaning from them and generate hypotheses about the implications and underlying causal relationships.

Conclusion: How to Apply AI

Use AI to support analysis and decision-making. Engage users at every stage, banish jargon, and explain that the algorithms perform simple tasks using lots of data and millions of calculations. Use visual analytics to help people engage with the data and explore underlying causal effects. Explain that data and judgement are the key success factors in applying AI.

Positive experience with AI “in its place”, in its assistive role, creates confidence. Experienced people, with their unparalleled feel for their business, can then quickly come to see where AI could create further opportunities for their firm, and they will be able to oversee the task.